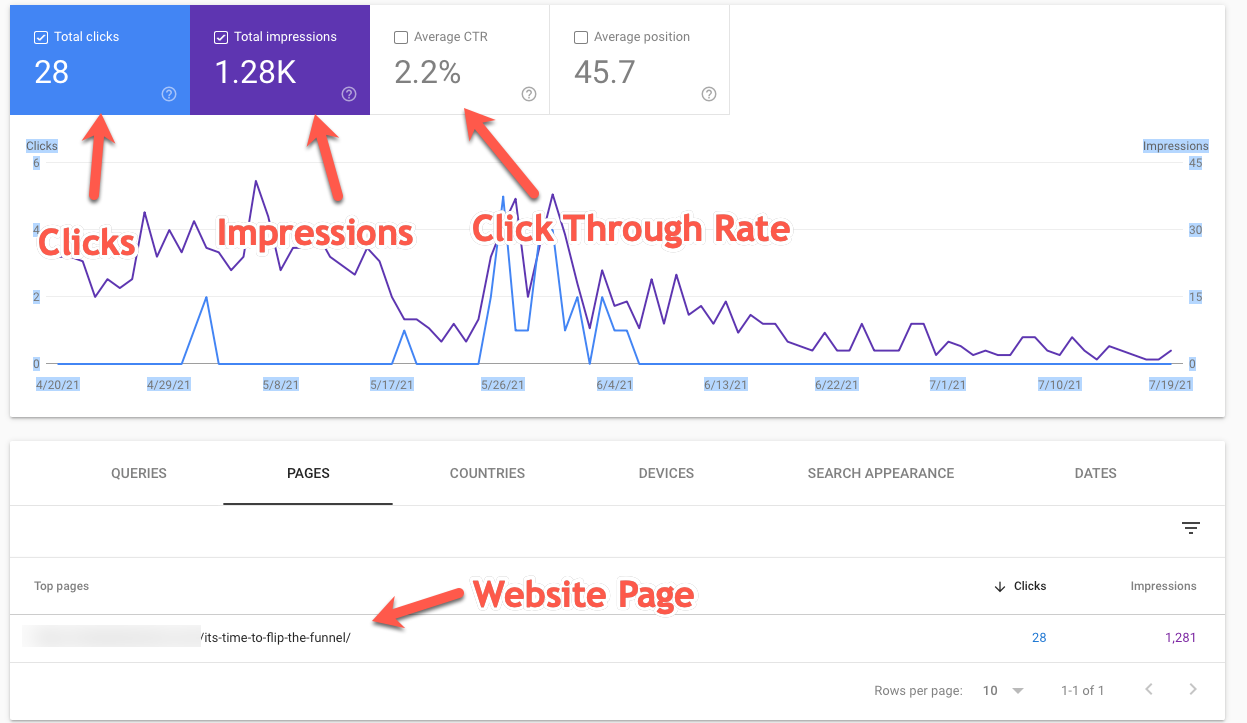

Click-through rate (CTR) could outweigh PageRank in the top Google search results

If, as the joke says, the best place to hide a corpse is page two of Google’s search results, then it follows that Google has a lot of click-through data for page one, but far less beyond that point.

And every CTR (click-through rate) study I know of shows that the vast majority of clicks on organic listings go to positions 1-10 (or 1-11).

If we study enough keyword search terms, we can use a CTR model like the one above to get an idea of where the traffic is likely to come from.
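As a rough illustration of that idea, here is a minimal sketch of a CTR model in Python. The CTR values per position, the default for lower positions, and the sample keywords are all placeholder assumptions made up for this example; they are not figures from the chart or from any study, and you would swap in your own curve.

```python
# Illustrative CTR-by-position curve. These numbers are placeholders
# invented for the example, not measured values from any study.
ASSUMED_CTR_BY_POSITION = {
    1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05,
    6: 0.04, 7: 0.03, 8: 0.025, 9: 0.02, 10: 0.015,
}
DEFAULT_CTR = 0.005  # assumed CTR for anything beyond page one


def estimate_clicks(monthly_searches: int, position: int) -> float:
    """Estimate monthly organic clicks for one keyword at a given rank."""
    ctr = ASSUMED_CTR_BY_POSITION.get(position, DEFAULT_CTR)
    return monthly_searches * ctr


def estimate_traffic(keywords: list[tuple[str, int, int]]) -> dict[str, float]:
    """Map each (keyword, monthly_searches, position) to estimated clicks."""
    return {kw: estimate_clicks(volume, pos) for kw, volume, pos in keywords}


if __name__ == "__main__":
    # Hypothetical keywords, search volumes and positions.
    sample = [
        ("blue widgets", 1000, 1),
        ("buy widgets", 500, 4),
        ("widget repair", 2000, 14),
    ]
    for kw, clicks in estimate_traffic(sample).items():
        print(f"{kw}: ~{clicks:.0f} clicks/month")
```

The more keywords you feed in, the less any single wrong guess about position or volume matters to the overall picture.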

However, if we take CTR out of the equation for a second and focus only on keyword rankings (I can already hear an angry mob gathering at the suggestion), we can see how those rankings fluctuate over time, which obviously affects our organic traffic.

Where these fluctuations seem to occur has some interesting implications for what drives these rankings in the first place: Most of the fluctuations occur after the third page.
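One way to check where the fluctuation sits is to measure it per results page. The sketch below is an assumption about how this could be done, not a description of how our charts are produced: it takes a hypothetical history of daily positions per keyword, works out the standard deviation of each keyword’s rank, and averages those by the page the keyword sits on most of the time.

```python
from collections import defaultdict
from statistics import mean, pstdev


def page_of(position: float, per_page: int = 10) -> int:
    """Return the results page (1-based) that a ranking position falls on."""
    return int((position - 1) // per_page) + 1


def volatility_by_page(history: dict[str, list[int]]) -> dict[int, float]:
    """Average the standard deviation of each keyword's rank, grouped by
    the page its mean position falls on."""
    buckets: dict[int, list[float]] = defaultdict(list)
    for positions in history.values():
        if len(positions) < 2:
            continue  # a single observation has no fluctuation to measure
        buckets[page_of(mean(positions))].append(pstdev(positions))
    return {page: mean(devs) for page, devs in sorted(buckets.items())}


if __name__ == "__main__":
    # Hypothetical rank histories, one position per tracking day.
    history = {
        "blue widgets": [1, 1, 2, 1],
        "widget repair": [35, 42, 28, 39],
    }
    for page, vol in volatility_by_page(history).items():
        print(f"page {page}: average rank std dev {vol:.2f}")
```

Standard deviation is only one possible measure; the average day-to-day change in position would work just as well for this comparison.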

Click Through Rate – Why Could This Happen?

Some SERPs fluctuate heavily because of QDF (query deserves freshness). For example, a user searching for the name of a sports team wants to find the team’s official website, but also wants to find the latest results, games, news and gossip.

As a result, Google refreshes those results much more frequently, or even in real time.

It could be argued that good rankings on certain keywords are the result of the website being well optimized for lucrative keywords and largely ignored for non-revenue keywords (which is fair enough), but that isn’t the case on these other pages, or on the pages of other major sites in the industry.

And on the same subject, if we assume that digital marketing and SEO experts are responsible for ensuring that the website ranks well for certain keywords, we would also have to assume that almost every website in these SERPs (multiple SERPs: the charts show several hundred keywords across the first few pages) is acquiring links at exactly the same rate, or that links are not a factor at all.

In our opinion, the fluctuations occur because the ranking factors after the second page are very different from those applied to the first 10 or 20 results. Other factors, PageRank for example, carry more weight beyond this point.

On the first page or two, Google has enough click data to build a robust ranking algorithm. Beyond that point, it no longer has the data it needs.

Click Through Rate – What Keywords Are We Looking For?

For the charts to be generated, we have to enter the keywords manually. The keywords we visualize are strictly the ones we want to see: no branded terms and no unwanted terms from off-topic blog posts.

As a result, we can get a better picture of an industry, rather than just a picture of a website’s overall visibility for each keyword it is ranking for, whether accidentally or not.

When “(not provided)” made it impossible to attribute search traffic to specific keywords in analytics, it became necessary to track keyword rankings more frequently and in larger numbers.

While in the past a client’s SEO report might have included the 100 keywords most important to us, today we track thousands of keywords for the companies we work with.

In the event of fluctuations in traffic, we need to know where we have potentially lost or gained visitors.

Obviously, while we’re reporting a lot more keywords now than ever before, it doesn’t change what a customer is or isn’t ranking for.

We have a more comprehensive list of opportunities: keywords that are relevant but not performing as well as they could (bottom of page 1, top of page 2, etc.) and that might benefit from changes to metadata or content, for example.

We can “bundle” or aggregate a large number of keywords and estimate traffic for a particular service we offer or a location in which we operate.

We can make an informed decision about whether a single page is relevant to too many terms, so that we should create a separate page or subpage, or whether two pages are competing for the same ranking and need to be combined to deliver a better user experience.
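The bundling and opportunity-spotting described above can be sketched in a few lines of Python. Everything here is hypothetical: the row layout, the group names, the click estimates, and the choice of positions 8 to 15 as the “bottom of page 1, top of page 2” window are assumptions made for the example, not the way our own reporting is built.

```python
from collections import defaultdict

# Hypothetical row layout: (keyword, group, position, estimated_monthly_clicks).
# "group" is whatever you bundle by, e.g. a service or a location.
Row = tuple[str, str, int, float]


def bundle_by_group(rows: list[Row]) -> dict[str, float]:
    """Sum estimated clicks for every keyword tagged with the same group."""
    totals: dict[str, float] = defaultdict(float)
    for _keyword, group, _position, clicks in rows:
        totals[group] += clicks
    return dict(totals)


def opportunities(rows: list[Row], low: int = 8, high: int = 15) -> list[str]:
    """Return keywords ranking around the page one / page two boundary."""
    return [kw for kw, _group, pos, _clicks in rows if low <= pos <= high]


if __name__ == "__main__":
    rows: list[Row] = [
        ("plumber leeds", "plumbing", 2, 100.0),
        ("emergency plumber", "plumbing", 11, 20.0),
        ("boiler repair", "heating", 5, 40.0),
    ]
    print(bundle_by_group(rows))
    print(opportunities(rows))
```

The same grouping key could just as easily be a landing page URL, which would also surface cases where one page is carrying too many unrelated terms.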

The obvious downside is that we’ve moved from a model where we track 100 keywords that are driving traffic to a model where we report those 100 keywords plus over 900 more that may or may not be driving much traffic, most of which a customer would consider a waste of time to report to their supervisors.

Because of this, we designed a dashboard that allows us to present this information in a meaningful way so that customers can filter the information however they want without removing the granularity we need to monitor.

Click Through Rate – Why Is That Important?

The difference between positions one and two for a competitive keyword is the number and quality of the links, right? Site B just needs more and better links to outperform Site A? Not so. This has not been the case for a long time.

We have been saying for years that if you have enough links to rank fifth, you have enough links to rank first.

The impact also varies in interesting ways across the industries we’re looking at, and I’ll be publishing another blog post on that soon. It seems to us that Google is reducing the weight of PageRank in SERPs that it considers “spammy”.

In gaming, for example, CTR is a much stronger ranking signal than in other industries: websites with better sign-up bonuses etc. get more clicks and therefore tend to rank quite well. From a search engine’s point of view, there is no point in treating all websites equally when nine of the top 10 are buying low-quality links en masse.
